Human–robot interaction is the study of interactions between humans and robots. Researchers often refer to it as HRI. It is a multidisciplinary field with contributions from human–computer interaction, artificial intelligence, robotics, natural language understanding, design, and the social sciences.

Definition

Formed from the two words “inter” and “action”, the term interaction suggests, by its very etymology, a mutual and reciprocal action among several elements. In the field of human relations, “interaction” is used as a contraction of “social interaction”, defined as an interpersonal relationship between two individuals (here, human and robot) in which information is shared.

Human–robot interaction draws on several areas of technology. To develop robots that can collaborate with humans and also “live” in contact with them, researchers develop learning algorithms, study mechanical design, and conduct research on materials.

Origins

Human–robot interaction has been a topic of both science fiction and academic speculation even before any robots existed. Because HRI depends on a knowledge of (sometimes natural) human communication, many aspects of HRI are continuations of human communications topics that are much older than robotics per se.

The origin of HRI as a discrete problem was stated by the 20th-century author Isaac Asimov in his 1942 short story “Runaround”, later collected in I, Robot (1950). He states the Three Laws of Robotics as:

A robot may not injure a human being or, through inaction, allow a human being to come to harm.

A robot must obey any orders given to it by human beings, except where such orders would conflict with the First Law.

A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

These three laws of robotics frame the idea of safe interaction. The closer the human and the robot get, and the more intricate their relationship becomes, the higher the risk of a human being injured. Today, manufacturers that employ robots address this issue by never letting humans and robots share the workspace. This is achieved by defining safe zones using lidar sensors or physical cages, so that humans are completely excluded from the robot workspace while the robot is working.
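
A lidar-based safe zone of the kind described above can be illustrated with a minimal sketch. The function name `zone_state`, the two radii, and the three-state output are assumptions made for illustration; real installations rely on certified safety scanners and controllers rather than application code like this.

```python
import math

def zone_state(ranges_m, stop_radius_m=1.0, warn_radius_m=2.5):
    """Classify one lidar scan as 'stop', 'warn', or 'clear'.

    ranges_m: iterable of range readings in metres. Non-finite or
    non-positive readings are treated as "no return" and ignored.
    The radii are hypothetical values, not taken from any standard.
    """
    valid = [r for r in ranges_m if math.isfinite(r) and r > 0.0]
    nearest = min(valid, default=math.inf)
    if nearest < stop_radius_m:
        return "stop"   # something is inside the protected zone: halt the robot
    if nearest < warn_radius_m:
        return "warn"   # something is approaching the zone: e.g. raise an alert
    return "clear"


if __name__ == "__main__":
    print(zone_state([3.2, 2.9, 0.7, float("inf")]))
```

The sketch treats every return as a potential human, which is the conservative choice when people are simply forbidden from the workspace.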

With advances in artificial intelligence, autonomous robots could eventually exhibit more proactive behaviors, planning their motion in complex, unknown environments. Even with these new capabilities, safety remains the primary concern and efficiency a secondary one. To enable this new generation of robots, research is being conducted on human detection, motion planning, scene reconstruction, intelligent behavior through task planning, and compliant behavior using force control (impedance or admittance control schemes).
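
As an illustration of the compliant behavior mentioned above, here is a minimal one-dimensional admittance control sketch. It assumes a virtual mass-spring-damper model, in which a measured external force is turned into a commanded motion; the function names and all gain values are hypothetical and chosen only to give a well-damped response.

```python
def admittance_step(x, v, f_ext, x_ref=0.0, mass=2.0, damping=20.0,
                    stiffness=50.0, dt=0.001):
    """Advance the virtual dynamics by one time step.

    Virtual model: mass*a + damping*v + stiffness*(x - x_ref) = f_ext
    x, v   : current commanded position (m) and velocity (m/s)
    f_ext  : external force measured at the end effector (N)
    """
    a = (f_ext - damping * v - stiffness * (x - x_ref)) / mass
    v_next = v + a * dt        # semi-implicit Euler integration
    x_next = x + v_next * dt
    return x_next, v_next


def simulate(f_ext, duration_s=5.0, dt=0.001):
    """Apply a constant force and return the final commanded position."""
    x, v = 0.0, 0.0
    for _ in range(int(round(duration_s / dt))):
        x, v = admittance_step(x, v, f_ext, dt=dt)
    return x


if __name__ == "__main__":
    # A steady push displaces the robot until the virtual spring balances it.
    print(simulate(10.0))
```

Lowering the virtual stiffness makes the robot yield more to the same push, which is the basic trade-off between compliance and positional accuracy in such schemes.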

The goal of HRI research is to define models of humans’ expectations regarding robot interaction to guide robot design and algorithmic development that would allow more natural and effective interaction between humans and robots. Research ranges from how humans work with remote, tele-operated unmanned vehicles to peer-to-peer collaboration with anthropomorphic robots.

Many in the field of HRI study how humans collaborate and interact and use those studies to motivate how robots should interact with humans.

The goal of friendly human–robot interactions

Robots are artificial agents with capacities for perception and action in the physical world, which researchers often refer to as the workspace. Their use has become widespread in factories, but today they are also found, in the most technologically advanced societies, in such critical domains as search and rescue, military battle, mine and bomb detection, scientific exploration, law enforcement, entertainment and hospital care.

These new application domains imply a closer interaction with the user. Closeness is to be understood in its full meaning: robots and humans share the workspace, but they also share goals in terms of task achievement. This close interaction needs new theoretical models, on the one hand for the robotics scientists who work to improve the robots’ utility, and on the other hand to evaluate the risks and benefits of this new “friend” for modern society.

With advances in AI, research is focusing partly on the safest possible physical interaction and partly on socially correct interaction, which depends on cultural criteria. The goal is to build intuitive and easy communication with the robot through speech, gestures, and facial expressions.

Dautenhahn refers to friendly human–robot interaction as “robotiquette”, defining it as the “social rules for robot behaviour (a ‘robotiquette’) that is comfortable and acceptable to humans”. The robot has to adapt itself to our way of expressing desires and orders, and not the contrary. But everyday environments such as homes have much more complex social rules than those implied by factories or even military environments. Thus, the robot needs perceiving and understanding capacities to build dynamic models of its surroundings. It needs to categorize objects, recognize and locate humans and, further, recognize their emotions. The need for dynamic capacities pushes forward every sub-field of robotics.

Furthermore, by understanding and perceiving social cues, robots can enable collaborative scenarios with humans. For example, with the rapid rise of personal fabrication machines such as desktop 3D printers and laser cutters entering our homes, scenarios may arise where robots and humans collaboratively share control, coordinate, and achieve tasks together. Industrial robots have already been integrated into industrial assembly lines and work collaboratively with humans. The social impact of such robots has been studied, and the results indicate that workers still treat robots as social entities and rely on social cues to understand them and work together.

At the other end of HRI research, cognitive modelling of the “relationship” between humans and robots benefits both psychologists and robotics researchers, and user studies are often of interest to both sides. This research is thus an endeavour that concerns part of human society. For effective interaction between humans and humanoid robots, numerous communication skills and related features should be implemented in the design of such artificial agents and systems.

Simplification of interactions

Humanization

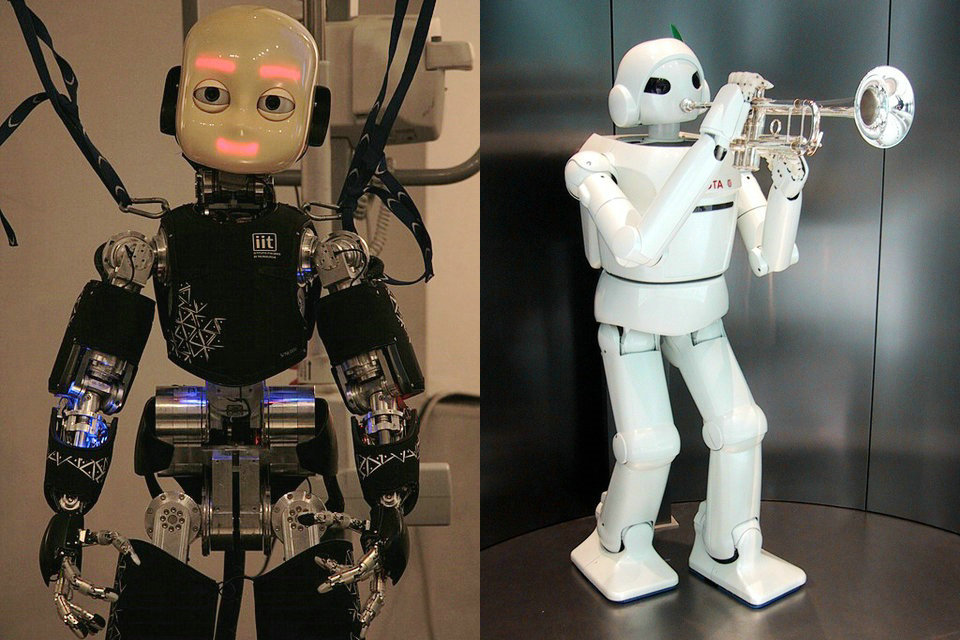

Not only is appearance important, but gestures also play a primary role. The more humanoid the appearance of the robot, the easier it will be for people to accept its company.

To make it easier for people to accept the robot and to ensure a natural and secure interaction, nothing is left to chance, starting with the appearance of the robot. Thus, the flexible material chosen as “skin” by the robotics company Robopec helps to make its robot expressive: “Reeti allows us to add interaction between the man and the robot, through a panel of emotions. Reeti’s skin is supple and deformable, so it can mimic certain emotions,” explains Christophe Rousset, founder of Robopec. In addition to an expressive face, soft and intelligent materials also allow a more sensitive touch.

In addition, reproducing human sensorimotor skills on a robot remains an essential challenge for robotics. This gap between artificial intelligence and sensorimotor intelligence is known as Moravec’s paradox.

Autonomy

AIST (the National Institute of Advanced Industrial Science and Technology, in Tsukuba), in collaboration with the CNRS, has been working for 10 years to develop communication between humans and robots, including an attempt to create a fully autonomous robot that understands and obeys humans. To make this possible, scientists have opted for an approach focused on tri-sensory perception. Through iCub, a small open-source robot with three senses (sight, hearing, touch), researchers at the Italian Institute of Technology are working to improve the sense of touch. iCub is a humanoid robot that can interact with its environment and with humans. Covered with sensors, it can recognize various objects, grasp them without crushing them, and remember their names.

At Akka Technologies, engineers have incorporated a layer of artificial intelligence into the Link and Go robotic car: “The car is able to recognize the passenger and, depending on the time and context, suggest routes. The robot becomes a source of proposals.” But no matter the level of intelligence, humans must always be able to take back control, in particular with collaborative service robots. Far from the fiction of a robot capable of taking control of our lives, Rodolphe Hasselvander, director of the Center for Integrated Robotics of Ile-de-France (CRIIF), brings us back to reality: “We are not at the point of having autonomous robots. The idea is to have a robot controlled remotely.”

Technological challenges

– Smart materials: to improve the sense of touch.

– Sensors: to better perceive the environment.

– Computing power: to compute trajectories in real time.

– Artificial intelligence: to learn to recognize the environment and perform new tasks.

– Mechanics: so that the robot’s movements appear natural to humans.

General HRI research

HRI research spans a wide range of fields, some of which concern the general nature of HRI.

Methods for perceiving humans

Most methods aim to build a 3D model of the environment through vision. Proprioceptive sensors give the robot information about its own state, expressed relative to a reference.

Methods for perceiving humans in the environment are based on sensor information. Research on sensing components and software led by Microsoft provides useful results for extracting human kinematics. An older technique uses colour information, for example the fact that, for light-skinned people, the hands are lighter than the clothes worn. In any case, an a priori model of the human can then be fitted to the sensor data. The robot builds or is given (depending on its level of autonomy) a 3D map of its surroundings, in which the humans’ locations are recorded.

A speech recognition system is used to interpret human desires or commands. By combining the information inferred from proprioception, sensors and speech, the robot can estimate the human’s position and state (standing, seated).

Methods for motion planning

Motion planning in dynamic environments remains a challenge and is currently achieved only for robots with 3 to 10 degrees of freedom. Humanoid robots, or even two-armed robots, which can have up to 40 degrees of freedom, are unsuited to dynamic environments with today’s technology. However, lower-dimensional robots can use the potential field method to compute trajectories that avoid collisions with humans.
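
The potential field method can be sketched in a few lines for a point robot in the plane. This is a minimal illustration under stated assumptions: humans are modelled as fixed points, the attractive potential is quadratic, the repulsive term follows the classic inverse-distance form with a limited influence radius, and the names `potential_field_step` and `plan` as well as all gains are hypothetical.

```python
import math

def potential_field_step(pos, goal, obstacles, k_att=1.0, k_rep=0.5,
                         influence=0.8, step=0.05):
    """Move one small step along the combined attractive/repulsive force."""
    # Attractive force: pulls the robot straight toward the goal.
    fx = k_att * (goal[0] - pos[0])
    fy = k_att * (goal[1] - pos[1])
    for ox, oy in obstacles:
        dx, dy = pos[0] - ox, pos[1] - oy
        d = math.hypot(dx, dy)
        if 0.0 < d < influence:
            # Repulsive force: grows sharply as the robot nears the obstacle
            # and vanishes at the edge of the influence radius.
            mag = k_rep * (1.0 / d - 1.0 / influence) / (d * d)
            fx += mag * dx / d
            fy += mag * dy / d
    norm = math.hypot(fx, fy)
    if norm == 0.0:
        return pos  # forces cancel exactly: the robot does not move
    scale = min(step, norm) / norm  # cap the displacement at `step`
    return (pos[0] + scale * fx, pos[1] + scale * fy)


def plan(start, goal, obstacles, max_iters=2000, tol=0.05):
    """Follow the force field from start until within `tol` of the goal."""
    path = [start]
    pos = start
    for _ in range(max_iters):
        if math.hypot(goal[0] - pos[0], goal[1] - pos[1]) < tol:
            break
        pos = potential_field_step(pos, goal, obstacles)
        path.append(pos)
    return path


if __name__ == "__main__":
    human = (1.0, 0.3)  # hypothetical human position near the straight path
    path = plan((0.0, 0.0), (2.0, 0.0), [human])
    print(len(path), path[-1])
```

A known limitation of this approach is that the forces can cancel at a local minimum, for instance when an obstacle lies exactly between the robot and the goal, in which case the robot stalls before reaching its target.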

Cognitive models and theory of mind

Humans exhibit negative social and emotional responses as well as decreased trust toward some robots that closely, but imperfectly, resemble humans; this phenomenon has been termed the “Uncanny Valley.” However, recent research on telepresence robots has established that mimicking human body postures and expressive gestures makes the robots likeable and engaging in a remote setting. Further, the presence of a human operator was felt more strongly when tested with an android or humanoid telepresence robot than with normal video communication through a monitor.

While there is a growing body of research about users’ perceptions of and emotions towards robots, we are still far from a complete understanding. Only additional experiments will determine a more precise model.

Based on past research we have some indications about current user sentiment and behavior around robots:

During initial interactions, people are more uncertain, anticipate less social presence, and have fewer positive feelings when thinking about interacting with robots. This finding has been called the human-to-human interaction script.

It has been observed that when the robot performs a proactive behaviour and does not respect a “safety distance” (by entering the user’s personal space), the user sometimes expresses fear. This fear response is person-dependent.

It has also been shown that when a robot has no particular use, negative feelings are often expressed. The robot is perceived as useless and its presence becomes annoying.

People have also been shown to attribute personality characteristics to the robot that were not implemented in software.

Methods for human-robot coordination

A large body of work in the field of human-robot interaction has looked at how humans and robots may better collaborate. The primary social cue for humans while collaborating is the shared perception of an activity. To this end, researchers have investigated anticipatory robot control through various methods, including monitoring the behaviors of human partners using eye tracking, making inferences about human task intent, and proactive action on the part of the robot. The studies revealed that anticipatory control helped users perform tasks faster than with reactive control alone.

A common approach to programming social cues into robots is to first study human-human behaviors and then transfer what is learned. For example, coordination mechanisms in human-robot collaboration are based on work in neuroscience that examined how to enable joint action in human-human configurations by studying perception and action in a social context rather than in isolation. These studies have revealed that maintaining a shared representation of the task is crucial for accomplishing tasks in groups. For example, one study examined the task of driving together by separating the responsibilities of acceleration and braking, so that one person was responsible for accelerating and the other for braking; it revealed that pairs reached the same level of performance as individuals only when they received feedback about the timing of each other’s actions. Similarly, researchers have studied human-human handovers in household scenarios, such as passing dining plates, in order to enable adaptive control of handovers between humans and robots. Most recently, researchers have studied a system that automatically distributes assembly tasks among co-located workers to improve coordination.

Application-oriented HRI research

In addition to general HRI research, researchers are currently exploring application areas for human-robot interaction systems. Application-oriented research is used to help bring current robotics technologies to bear against problems that exist in today’s society. While human-robot interaction is still a rather young area of interest, there is active development and research in many areas.

HRI/OS research

The Human-Robot Interaction Operating System (HRI/OS) “provides a structured software framework for building human-robot teams, supports a variety of user interfaces, enables humans and robots to engage in task-oriented dialogue, and facilitates integration of robots through an extensible API”.

Search and rescue

First responders face great risks in search and rescue (SAR) settings, which typically involve environments that are unsafe for a human to enter. In addition, technology offers tools for observation that can greatly speed up human perception and improve its accuracy. Robots can be used to address these concerns. Research in this area includes efforts to address robot sensing, mobility, navigation, planning, integration, and tele-operated control.

SAR robots have already been deployed in environments such as the site of the collapse of the World Trade Center.

Other application areas include:

Entertainment

Education

Field robotics

Home and companion robotics

Hospitality

Rehabilitation and Elder Care

Robot Assisted Therapy (RAT)

Source from Wikipedia